“Fair use is the great white whale of American copyright law. Enthralling, enigmatic, protean, it endlessly fascinates us even as it defeats our every attempt to subdue it.” – Paul Goldstein

__________________________

This is the second in a 3-part series of posts on Large Language Models (LLMs) and copyright. (Part 1 here)

In this post I’ll turn to a controversial and important legal question: does the use of copyrighted material in training LLMs for generative AI constitute fair use? This analysis requires a nuanced understanding of both copyright fair use and the technical aspects of LLM training (see Part 1). To examine this complex issue I’ll look at recent relevant case law and consider potential solutions to the legal challenges posed by AI technology.

Introduction

The issue is this: generative AI systems – systems that generate text, graphics, video, music – are being trained without permission on copies of millions of copyrighted books, artwork, software and music scraped from the internet. However, as I discussed in Part 1 of this series, the AI industry argues that the resulting models themselves are not infringing. Rightsholders argue that even if this is true (and they assert that it is not), the use of their content to train AI models is infringing, and that is the focus of this post.

To put this in perspective, consider where AI developers get their training data. It’s generally acknowledged that many of them have used resources such as Common Crawl, a digital archive containing 50 billion web pages, and Books3, a digital library of thousands of books. While these resources may contain works that are in the public domain, there’s no doubt that they contain a huge quantity of works that are protected by copyright.

In the AI industry, the thirst for this data is insatiable – the bigger the language models, the better they perform, and copyrighted works are an essential component of this data. In fact, the industry is already looking at a “data wall,” the time when they will run out of data. They may hit that wall in the next few years. If copyrighted works can’t be included in training data, it will be even sooner.

Rightsholders assert that the use of this content to train LLMs is outright, massive copyright infringement. The AI industry responds that fair use – codified in 17 U.S.C. § 107 – covers most types of model training where, as they assert, the resulting model functions differently than the input data. This is not just an academic difference – the issue is being litigated in more than a dozen lawsuits against AI companies, attracting a huge amount of attention from the copyright community.

No court has yet ruled on whether fair use protects the use of copyright-protected material as training material for LLMs. Eventually, the courts will answer this question by applying the language of the statute and the court decisions applying copyright fair use.

Legal Precedents Shaping the AI Copyright Landscape

To understand how the courts are likely to evaluate these cases we need to look at four recent cases that have shaped the fair use landscape: the two Google Books cases, Google v. Oracle, and Warhol Foundation v. Goldsmith. In addition, the courts are likely to apply what is known as the “intermediate copying” line of cases.

The Google Books Cases. Let’s start with the two Google Books cases, which in many ways set the stage for the current AI copyright dilemma. The AI industry has put its greatest emphasis on these cases. (OpenAI: “Perhaps the most compelling case on point is Authors Guild v. Google”).

Authors Guild v. Google and Authors Guild v. HathiTrust. In 2015, the Second Circuit Court of Appeals decided Authors Guild v. Google, a copyright case that had been winding through the courts for a decade. Google had scanned millions of books without permission from rightsholders, creating a searchable database.

The Second Circuit held that this was fair use. The court’s decision hinged on two key points. First, the court found Google’s use highly “transformative,” a concept central to fair use. Google wasn’t reproducing books for people to read; it was creating a new tool for search and analysis. While Google allowed users to see small “snippets” of text containing their search terms, this didn’t substitute for the actual books. Second, the court found that Google Books was more likely to enhance the market for books than harm it. The court also emphasized the immense public benefit of Google Books as a research tool.

A sister case in the Google Books saga was Authors Guild v. HathiTrust, decided by the Second Circuit in 2014. HathiTrust, a partnership of academic institutions, had created a digital library from book scans provided by Google. HathiTrust allowed researchers to conduct non-consumptive research, such as text mining and computational analysis, on the corpus of digitized works. Just as in Google Books, the court found the creation of a full-text searchable database to be a fair use, even though it involved copying entire works. Importantly, the court held this use of the copyrighted books to be transformative and “nonexpressive.”

The two cases were landmark fair use decisions, especially for their treatment of mass digitization and nonexpressive use of copyrighted works – a type of use that involves copying copyrighted works but does not communicate the expressive aspects of those works.

These two cases, while important, by no means guarantee the AI industry the fair use outcome they are seeking. Reliance on Google Books falters given the scope of potential output of AI models. Unlike Google Books’ limited snippets, LLMs can generate extensive text that may mirror the style and substance of copyrighted works in their training data. This raises concerns about market harm, a critical factor in fair use analysis, and whether LLM-generated content could eventually serve as a market substitute for the original works. The New York Times argues just this in its copyright infringement case against OpenAI and Microsoft.

HathiTrust is an even weaker precedent for LLM fair use. The Second Circuit held that HathiTrust’s full-text search “posed no harm to any existing or potential traditional market for the copyrighted works.” LLMs, in contrast, have the potential to generate content that could compete with or substitute for original works, potentially impacting markets for copyrighted material. Also, HathiTrust was created by universities and non-profit institutions for educational and research purposes. Commercial LLM development may not benefit from the same favorable consideration under fair use analysis.

In sum, the significant differences in purpose, scope, and potential market impact make both Google Books and HathiTrust imperfect authorities for justifying the comprehensive use of copyrighted materials in training LLMs.

Google v. Oracle. Fast forward to 2021 for another landmark fair use case, this time involving software code. In Google v. Oracle, the Supreme Court held that Google’s copying of 11,500 lines of code from Oracle’s Java API was intended to facilitate interoperability, and was fair use.

The Court found Google’s “purpose and character” was transformative because it “sought to create new products” and was “consistent with that creative ‘progress’ that is the basic constitutional objective of copyright itself.” The Court also downplayed the market harm to Oracle, noting that Oracle was “poorly positioned to succeed in the mobile phone market.”

This decision seemed to open the door for tech companies to make limited use of some copyrighted works in the name of innovation. However, the case’s focus on functional code limits its applicability to LLMs, which are trained on expressive works like books, articles, and images. The Supreme Court explicitly recognized the inherent differences between functional works, which lean towards fair use, and expressive creations at the heart of copyright protection. So, again, the AI industry will have difficulty deriving much support from this decision.

And, before we could fully digest Oracle’s implications for fair use, the Supreme Court threw a curveball.

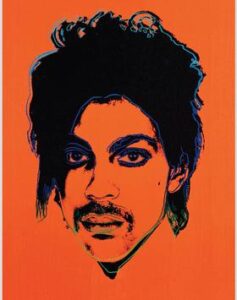

Andy Warhol Foundation v. Goldsmith. In 2023, the Court decided Andy Warhol Foundation v. Goldsmith (Warhol), a case dealing with Warhol’s repurposing of a photograph of the musician Prince. While the case focused specifically on appropriation art, its core principles resonate with the ongoing debate surrounding LLMs’ use of copyrighted materials.

The Warhol decision emphasizes a use-based approach to fair use analysis, focusing on the purpose and character of the defendant’s use, particularly its commercial nature, and whether it serves as a market substitute for the original work. This emphasis on commerciality and market substitution poses challenges for LLM companies defending the fair use of copyrighted works in training data. The decision underscores the importance of considering potential markets for derivative works. As the use of copyrighted works for AI training becomes increasingly common, a market for licensing such data is emerging. The existence of such a market, even if nascent, could weaken the argument that using copyrighted materials for LLM training is a fair use, particularly when those materials are commercially valuable and readily licensable.

The “Intermediate Copying” Cases. I also expect the AI industry to rely on the case law on “intermediate copying.” In this line of cases the users copied material to discover unprotectable information or as a minor step towards developing an entirely new product. So the final output – despite using copied material as an intermediate step – was noninfringing. In these cases the “intermediate use” was held to be fair use. See Sega v. Accolade (9th Cir. 1992) (defendant copied Sega’s copyrighted software to figure out the functional requirements to make games compatible with Sega’s gaming console) and Sony v. Connectix (9th Cir. 2000) (defendant used a copy of Sony’s software to reverse engineer it and create a new gaming platform on which users could play games designed for Sony’s gaming system).

AI companies likely will argue that, just as in these cases, LLMs study language patterns as part of the process of transforming intermediate copying into noninfringing materials. Rightsholders likely will argue that in those cases the copiers sought only to study functionality or create compatibility, and that the scope and nature of that use, and the resulting products, are vastly different from LLM training. I expect rightsholders will have the better argument on these cases.

Applying Legal Precedents to AI

So, where does this confusing collection of cases leave us? Here’s a summary:

The Content Industry Position – in a Nutshell: Rightsholders argue that – even assuming that the final LLM model does not contain expressive content (which they dispute) – the use of copyrighted works to train LLMs is an infringement not excused by fair use. They argue that all four fair use factors weigh against AI companies:

– Purpose and character: Many (but not all) AI applications are commercial, which cuts against the industry’s fair use argument, especially in light of Warhol’s emphasis on commercial purpose and the potential licensing market for training data. The existence of a licensing market for training datasets suggests that AI companies can obtain licenses rather than rely on fair use defenses. This last point – market effect – is particularly important in light of the Supreme Court’s holding in Warhol.

– Nature of the work: Unlike the computer code in Google v. Oracle, which the Supreme Court noted receives “thin” protection, the content ingested by AI companies contains highly creative works like books, articles, and code. This distinguishes Oracle from AI training, and cuts against fair use.

– Amount used: Entire works are copied, a factor that weighs against fair use.

– Market effect: End users are able to extract verbatim content from LLMs, harming the market for original works and, as noted above, harming current and future AI training licensing markets.

The AI Industry Position – in a Nutshell. The AI industry will argue that the use of copyrighted works should be considered fair use:

– Transformative Use: The AI industry argues that AI training creates new tools with different purposes from the original works, using copyright material in a “nonexpressive” way. AI developers draw parallels to “context shifting” fair use cases dealing with search engines and digital libraries, such as the Google Books project, arguing AI use is even more transformative. I expect them to rely on Google v. Oracle to argue that, just as Google’s use of Oracle’s API code was found to be transformative because it created something new that expanded the use of the original code (the Android platform), AI training is transformative, as it creates new systems with different purposes from the original works. Just as the Supreme Court emphasized the public benefit of allowing programmers to use their acquired skills, similarly AI advocates are likely to highlight the broad societal benefits and innovation enabled by LLMs trained on diverse data.

– Intermediate Copying. AI proponents will support this argument by pointing to the “intermediate copying” line of cases, which hold that using copyrighted works for purposes incidental to a nonexpressive purpose (creating the non-infringing model itself), is permissible fair use.

– Market Impact: AI proponents will argue that AI training, and the models themselves, do not directly compete with or substitute for the original copyrighted works.

– Amount and Substantiality: Again, relying on Google v. Oracle, AI proponents will note that despite Google copying entire lines of code, the Court found fair use. This will support their argument that copying entire works for AI training doesn’t preclude fair use if the purpose is sufficiently transformative.

– Public Benefit: In Google v. Oracle the Court showed a willingness to interpret fair use flexibly to accommodate technological progress. AI proponents will rely on this, and argue that applying fair use to AI training has social benefits and aligns with copyright law’s goal of promoting progress. The alternative, restricting access to training data, could significantly hinder AI research and development. (AI “doomers” are unlikely to be persuaded by this argument).

– Practical Necessity: Given the vast amount of data needed, obtaining licenses for all copyrighted material used in training is impractical, impossible or would be so expensive that it would stifle AI development.

As I noted above, several of the lawsuits filed to date allege that some generative AI models have “memorized” copyrighted materials and are able to output them in a way that could substitute for the copyrighted work. If the outputs of a system can infringe, the argument that the system itself does not implicate copyright’s purposes will be significantly weakened.

While Part 3 of this series will explore these output-related issues in depth, it’s important to recognize the intrinsic link between these concerns and input-side training challenges. In assessing AI’s impact on copyright law, courts may adopt a holistic approach, considering the entire content lifecycle – from data ingestion to LLMs to final output. This interconnected perspective reflects the complex nature of AI systems, where training methods directly influence both the characteristics and potential infringement risks of generated content.

Potential Solutions and Future Directions

As challenging as these issues are, we need to start thinking about practical solutions that balance the interests of AI developers, content creators, and the public. Here are some possibilities, along with their potential advantages and drawbacks.

Licensing Schemes: One proposed solution is to develop comprehensive licensing systems for AI training data, similar to those that exist for certain music uses. This could provide a mechanism for compensating creators while ensuring AI developers have access to necessary training data.

Proponents argue that this approach would respect copyright holders’ rights and provide a clear framework for legal use. However, critics rightly point out that implementing such a system would be enormously complex and impractical. The sheer volume of content used in AI training, the difficulty of tracking usage, and the potential for exorbitant costs could stifle innovation, particularly for smaller AI developers.

New Copyright Exceptions: Another approach is to create specific exemptions for AI training, perhaps limited to non-commercial or research purposes. This could be similar to existing fair use exceptions for research and could promote innovation in AI development. The advantage of this approach is that it provides clarity and could accelerate AI research. However, defining the boundaries of “non-commercial” use in the rapidly evolving AI landscape could prove challenging.

International Harmonization: Given the global nature of AI development, the industry may need to work towards a unified international approach to copyright exceptions for AI. This could involve amendments to international copyright treaties or the development of new AI-specific agreements. However, international copyright negotiations are notoriously slow and complex. Different countries have varying interests and legal traditions, which could make reaching a consensus difficult.

Technological Solutions: We should also consider technological approaches to addressing these issues. For instance, AI companies could develop more sophisticated methods to anonymize or transform training data, making it harder to reconstruct original works on the “output” side. They could also implement filtering systems to prevent the output of copyrighted material. While promising, these solutions would require significant investment and might not fully address all legal concerns. There’s also a risk that overzealous filtering could limit the capabilities of AI systems.

Hybrid Approaches: Perhaps the most promising solutions will combine elements of the above approaches. For example, we could see a tiered system where certain uses are exempt, others require licensing, and still others are prohibited. This could be coupled with technological measures such as synthetic training data, and international guidelines.

Market-Driven Solutions: As the AI industry matures, we are likely to see the emergence of new business models that naturally address some of these copyright concerns. For instance, content creators might start producing AI-training-specific datasets, or AI companies might vertically integrate to produce their own training content. X’s Grok AI product and Meta are examples of this.

As we consider these potential solutions, it’s crucial to remember that the goal of copyright law is to foster innovation while fairly compensating creators and respecting intellectual property rights. Any solution will likely require compromise from all stakeholders and will need to be flexible enough to adapt to rapidly changing technology.

Moreover, these solutions will need to be developed with input from a diverse range of voices – not just large tech companies and major content producers, but also independent creators, smaller AI startups, legal experts, and public interest advocates. The path forward will require creativity, collaboration, and a willingness to rethink traditional approaches to copyright in the artificial intelligence age.

Conclusion – The Road Ahead

The intersection of AI and copyright law presents complex challenges that resist simple solutions. The Google Books cases provide some support for mass digitization and computational use of copyrighted works. Google v. Oracle suggests courts might look favorably on uses that promote new and beneficial AI technologies. But Warhol reminds us that transformative use has limits, especially in commercial contexts.

For AI companies, the path forward involves careful consideration of training data sources and potential licensing arrangements. It may also mean being prepared for legal challenges and working proactively with policymakers to develop workable solutions.

For content creators, it’s crucial to stay informed about how your work might be used in AI training. There may be new opportunities for licensing, but also new risks to consider.

For policymakers and courts, the challenge is to strike a balance that fosters innovation while protecting the rights and incentives of creators. This may require rethinking some fundamental aspects of copyright law.

The relationship between AI and copyright is likely to be a defining issue in intellectual property law for years to come. Stay tuned, stay informed, and be prepared for a wild ride.

Continue with Part 3 of this series here.