“AI models are what’s known in computer science as black boxes: You can see what goes in and what comes out; what happens in between is a mystery.”

Trust but Verify: Peeking Inside the “Black Box” of Machine Learning

In December 2023, The New York Times filed a landmark lawsuit against OpenAI and Microsoft, alleging copyright infringement. This case, along with a number of similar cases filed against AI companies, brings to the forefront a fundamental challenge in applying traditional copyright law to a revolutionary technology: Large Language Models (LLMs). Perhaps more than any copyright case that precedes them, these cases grapple with a form of alleged infringement that defies conventional legal analysis.

This article is the first in a three-part series that will examine the copyright implications of the AI development process.

Disclaimer: I’m not a computer or AI scientist. However, neither are the judges and juries that will be asked to apply copyright law to this technology, or the legislators that may enact laws regulating it. It’s unlikely that they will go much beyond the level of detail I’ve used here.

What are Large Language Models (LLMs)?

Large Language Models, or LLMs, are gargantuan AI systems that use a vast corpus of training data and billions to trillions of parameters. They are designed to understand, generate, and manipulate human language. They learn patterns from the data, allowing them to perform a wide range of language tasks with remarkable fluency. Their inner workings are fundamentally different from any previous technology that has been the subject of copyright litigation, including traditional computer software.

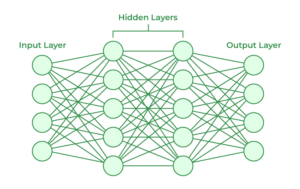

LLMs typically use transformer-based neural networks: interconnected nodes organized into layers that can perform computations. The strengths of these connections—the influences that nodes have on another—are what is learned during training. These are called the model parameters or weights, and they are represented as numbers.

Here’s a simplified explanation of what happens when you use an AI like a large language model:

- You input a prompt (your question or request).

- The computer breaks down your prompt into smaller pieces called tokens. These can be words, parts of words, or even individual characters.

- The AI processes these tokens through its neural network – imagine this like a complex web of connections. Each part of this network analyzes the tokens and figures out how they relate to each other.

- As it processes, the AI predicts the probability distribution for the next token based on what it learned during its training.

- The LLM selects tokens based on these probabilities and combines them to create a coherent response or output for you, the user.

The “large” in Large Language Models primarily refers to the enormous number of parameters these models contain – sometimes in the trillions. These parameters represent the model’s learned patterns and relationships, fine-tuned through exposure to massive amounts of text data. While larger and more diverse high-quality datasets can lead to better AI models, other factors such as model architecture, training techniques, and fine-tuning also play important roles in model performance.

How Do AI Companies Obtain Their Training Data?

AI companies employ various methods to acquire this data –

– Web scraping and crawling. One of the primary methods of data acquisition is web scraping – the automated process of extracting data from websites. AI companies deploy sophisticated crawlers that systematically browse the internet, copying text from millions of web pages. This method allows for the collection of diverse, up-to-date information but raises questions about the use of copyrighted material without explicit permission.

– Partnerships and licensing agreements. Some companies enter into partnerships or licensing agreements to access high-quality, curated datasets. For instance, OpenAI has partnered with organizations like the Associated Press to use its news archives for training purposes.

– Public datasets and academic corpuses. Many LLMs are trained, at least in part, on publicly available datasets and academic text collections. These might include Project Gutenberg’s collection of public domain books, scientific paper repositories, or curated datasets like the Common Crawl corpus.

– User-generated content. Platforms that interact directly with users, such as ChatGPT, can potentially use the conversations and inputs from users to further train and refine their models. This practice raises privacy concerns and questions about the ownership of user-contributed data.

In the context of the New York Times lawsuit, it’s worth noting that OpenAI, like many AI companies, has not publicly disclosed the full extent of its training data sources. However, it’s widely believed that the company uses a combination of publicly available web content, licensed datasets, and partnerships to build its training corpus. The lawsuit alleges that this corpus includes copyrighted New York Times articles, obtained without permission or compensation.

The Training Process: How Machines “Learn” From Data

Once acquired, the raw data undergoes several processing steps before it can be used to train an LLM –

– Data preprocessing and cleaning. The first step involves cleaning the raw data. This includes removing irrelevant information, correcting errors, and standardizing the format. This may involve stripping away HTML tags, removing advertisements, or filtering out low-quality content.

– Tokenization and encoding. Next, the text is broken down into smaller units called tokens. These might be words, parts of words, or even individual characters. Each token is then converted into a numerical representation that the AI can process. This step is crucial as it determines how the model will interpret and generate language.

During training, the LLM is exposed to this preprocessed data, learning to predict patterns and relationships between tokens. This is an iterative process where the model makes predictions, compares them to the actual data, and adjusts its internal parameters to improve accuracy. This process, known as “backpropagation,” is repeated billions of times across the entire dataset. In a large LLM this can take months, operating 24/7 on a massive system of graphics processing chips.

The Transformation From Text to Numbers

For purposes of copyright law, here’s the crux of the matter: the AI industry asserts that after this process, the original text no longer exists in any recognizable form within the LLM. The model becomes a vast sea of numbers, with no direct correspondence to the original text. If true, this transformation creates a fundamental challenge for copyright law –

– No Side-by-Side Comparison: In traditional copyright cases, courts rely heavily on comparing the original work side-by-side with the allegedly infringing material. With LLMs, this is impossible. You can’t “read” an LLM or print it out for comparison.

– Black Box Nature: The internal workings of LLMs are often referred to as a “black box.” Even the developers may not fully understand how the model arrives at its outputs.

– Dynamic Generation: The AI industry claims that LLMs don’t store and retrieve text in a conventional database format; they generate it dynamically based on learned patterns. This means that any similarity to copyrighted material in the output is a result of statistical prediction, not direct copying.

– Distributed Information: The AI industry claims that Information from any single source is distributed across countless parameters in the model, making it impossible to isolate the influence of any particular work.

However, copyright owners do not concede that completed AI models (as distinct from the training data) are only abstracted statistical patterns of the training data. Rightsholders assert that LLMs do indeed retain the expressions of the original works on which they have been trained. There are studies showing the LLM models are able to regurgitate their training materials, and the New York Times lawsuit against OpenAI and Microsoft shows 100 examples of this. See also Concord Music Group v. Anthropic (alleging that song lyrics can be accessed verbatim or near-verbatim from Claude). Rightsholders argue that this could only occur if the models encode the expressive content of these works.

Copyright Implications

Assuming the AI developers’ explanation to be correct (if its not the infringement case against them is strong), AI technology creates unprecedented challenges for copyright law –

– Proving Infringement: How can a plaintiff prove infringement when the allegedly infringing material can’t be directly observed or compared?

– Fair Use Analysis: Traditional fair use factors, such as the amount and substantiality of the portion used, become difficult to apply when the “portion used” is transformed beyond recognition.

– Substantial Similarity: The legal test of “substantial similarity” between works becomes almost meaningless in the context of LLMs.

– Expert Testimony: Courts will likely have to rely heavily on expert testimony to understand the technology, but even experts may struggle to definitively prove or disprove infringement.

For all of these reasons, to prove copyright infringement plaintiffs such as the New York Times may be limited to claiming copyright infringement based on the “intermediate” copies that are used in the training process and user-prompted output, rather than the LLM models themselves.

Conclusion

The NYT v. OpenAI case and others raising the same issue highlight a fundamental mismatch between traditional copyright law and the reality of LLM technology and the AI industries’ fair use defense. The outcome of this case could reshape our understanding of copyright in the digital age, potentially requiring new legal tests and standards that can account for the invisible, transformed nature of information within AI systems.

Part 2 in this series will focus on the legal issues around the “input problem” of using copyrighted material for training. Part 3 will look at the “output problem” of AI-generated content that may copy or resemble copyrighted works, including what the AI industry calls “memorization.” As we’ll see, each of these issues presents its own unique challenges in the context of a technology that defies traditional legal analysis.

Continue reading Part 2 and Part 3 of this series.